The Macro: The LLM Plumbing Problem Nobody Wanted to Have

Somewhere around the fourth time a dev team rewrote its OpenAI integration because Anthropic dropped something better, the industry quietly admitted it had a routing problem. The promise of the LLM boom was intelligence-as-a-utility. Call an endpoint, get smart output, ship product. The reality is a sprawling, constantly shifting catalog of models from OpenAI, Anthropic, Google, Mistral, Cohere, and whoever pushed a new fine-tune to Hugging Face last Tuesday, each with different APIs, different pricing structures, different rate limits, and different failure modes.

The pain is real and specific. Teams building on top of LLMs are making bets on individual providers that can go sideways fast. A model gets deprecated. A rate limit kicks in during a traffic spike. A cost structure changes with a blog post and two weeks’ notice. What this created, predictably, is a new category: the LLM gateway. Tools that sit between your application and the model layer, handling routing, fallback, load balancing, and observability. LiteLLM is probably the most-cited open-source option. Portkey and Helicone have staked out positions on observability and cost tracking. Martian made routing-by-task its whole pitch.

The category exists. The problem is validated. Players already have traction.

ZenMux is walking into a room that isn’t empty, which means whatever they’re doing differently has to actually be different, not just differently described. The timing argument sells itself at this point. The question is execution and differentiation, and for ZenMux that differentiation is a specific, contractual promise most competitors haven’t touched.

The Micro: The Interesting Part Is the Liability

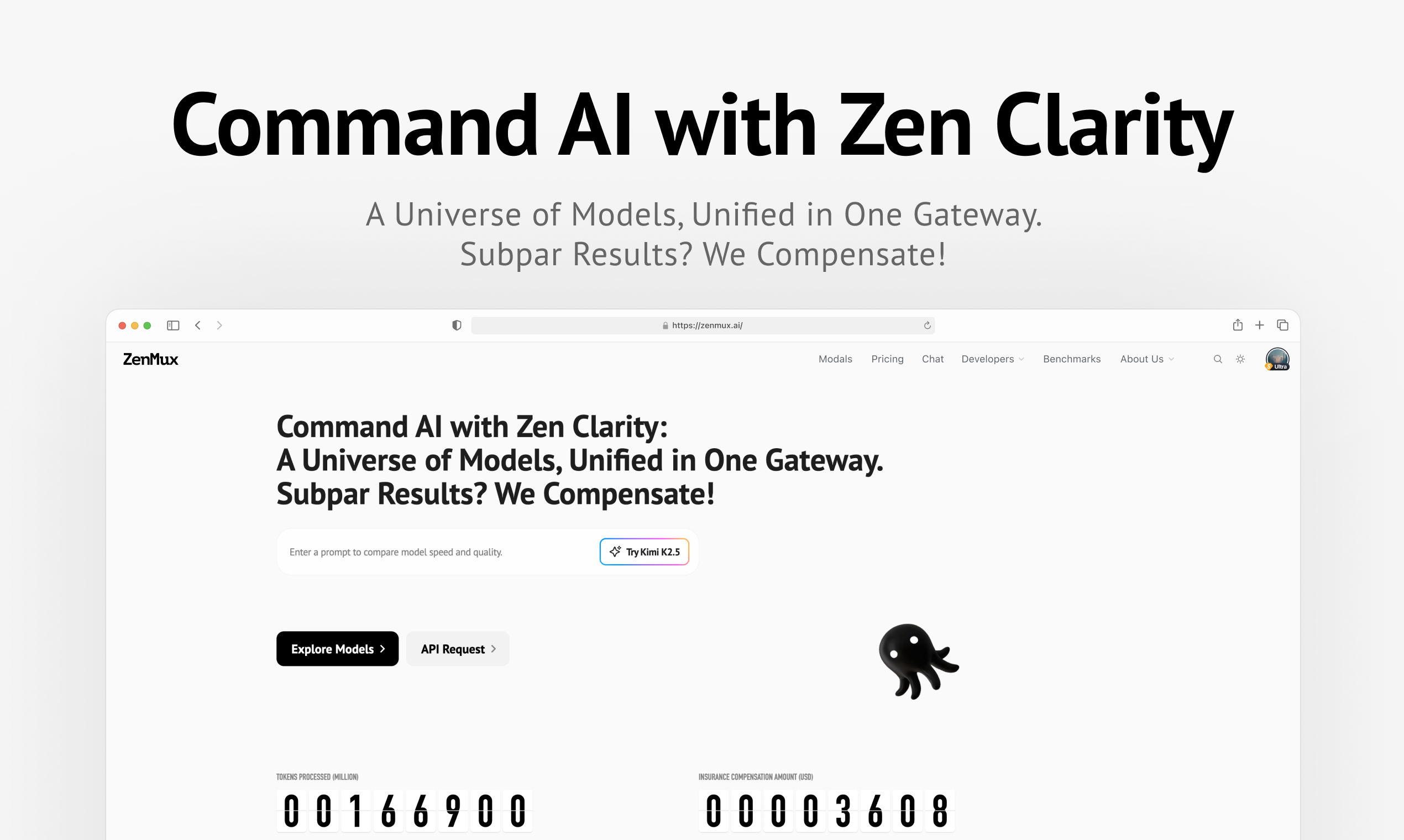

At its core, ZenMux is doing what every LLM gateway does: unified API, smart routing across providers, one account to manage instead of five. You point your app at ZenMux, configure your preferences, and it handles model selection and failover behind the scenes. For a team already manually wiring together multiple providers, this is genuinely useful and not a hard sell.
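To make the failover part concrete, here is a minimal sketch of the generic pattern a gateway automates: try providers in preference order and fall through on failure. It is not ZenMux's API or its routing logic, which aren't publicly documented in enough detail to reproduce. The `Provider` wrapper, `ProviderError` exception, and the stub provider functions are hypothetical stand-ins for real SDK calls.

```python
from dataclasses import dataclass
from typing import Callable, Sequence


class ProviderError(Exception):
    """Hypothetical error a provider call raises on timeout, rate limit, or 5xx."""


@dataclass
class Provider:
    name: str
    # Hypothetical call signature: takes a prompt, returns completion text.
    complete: Callable[[str], str]


def route_with_fallback(prompt: str, providers: Sequence[Provider]) -> tuple[str, str]:
    """Try providers in preference order; return (provider_name, completion)."""
    errors: list[str] = []
    for provider in providers:
        try:
            return provider.name, provider.complete(prompt)
        except ProviderError as exc:
            # Record the failure and fall through to the next provider.
            errors.append(f"{provider.name}: {exc}")
    raise RuntimeError("all providers failed: " + "; ".join(errors))


# Usage with stub providers (hypothetical): the primary is rate-limited, the backup answers.
def flaky(prompt: str) -> str:
    raise ProviderError("rate limited")


def healthy(prompt: str) -> str:
    return f"echo: {prompt}"


if __name__ == "__main__":
    name, text = route_with_fallback(
        "hello", [Provider("primary", flaky), Provider("backup", healthy)]
    )
    print(name, text)  # backup echo: hello
```

Real gateways layer cost-, latency-, and task-aware model selection on top of this loop. Plain ordered failover is the baseline any "smart routing" claim has to beat.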

The thing that makes ZenMux worth writing about is what they’re calling automatic compensation.

The claim is that when ZenMux fails to deliver (timeouts, errors, service degradation), it compensates users automatically. According to the product's own positioning, this is industry-first territory, and honestly that framing is probably accurate. SLA-backed compensation in the API middleware space is not common. Most tools give you an SLA on paper and a support ticket in practice.

The specifics (how compensation is calculated, what triggers it, and where the ceiling sits) aren't fully surfaced in publicly available material. That's exactly what I'd want to know before calling this a genuine differentiator rather than a well-positioned marketing claim. The devil in any compensation mechanism is the fine print. Always.

It got solid traction on launch day, with strong comment volume suggesting people were actually engaging with the product rather than just clicking upvote on a maker’s prompt. According to LinkedIn, the team includes Ming Jia as VP of Engineering and Olivia Ma as co-founder and head of growth, with prior experience at Ant Group and Agora. That’s a credible distribution of skills for a developer-tools company. Someone who can build it, someone who can explain it to the market.

The Verdict

ZenMux has a real product addressing a real problem. The automatic compensation angle is genuinely interesting, not because compensation clauses are magic, but because committing to one publicly changes how a team thinks about reliability. You don't build a payout mechanism and then let your uptime slip.

What makes this work at 90 days: the compensation terms get published clearly, a handful of credible engineering teams share actual production experience with the routing and fallback behavior, and the pricing holds up against LiteLLM, which is free and self-hostable. That comparison will come up constantly.

What makes this stall is just as easy to name: a compensation mechanism with enough carve-outs to be mostly ceremonial, smart routing that turns out to be round-robin with extra steps, or a team that can't close the gap between developer curiosity and enterprise procurement cycles. Enterprise infrastructure procurement is slow. That's not a knock on ZenMux specifically. It's just the terrain.

My actual read: this is probably the right product for mid-size engineering teams that already juggle two or three providers and want managed fallback without standing up their own gateway. I'd be more skeptical about large enterprises with existing vendor relationships and legal teams who will absolutely read the compensation terms in full. ZenMux found a real angle and launched it with conviction. Whether that conviction survives contact with actual enterprise SLA negotiations is the only question that matters now. I'd read the docs before I signed anything.