The Macro: AI Agent Tooling Is Fragmented and Nobody Has Fixed It Yet

Eighteen months of AI coding agent releases produced a genuinely useful mess. Cursor has its rules. Claude Code has its skills. Copilot has its extensions. Windsurf does its own thing. Each tool is good at what it does and completely unaware the others exist. The practical result is a developer class that’s technically more capable than ever and also maintaining a small personal bureaucracy of config files just to function.

The open source services market has real momentum behind it. Multiple analysts put the 2025 figure somewhere between $35 billion and $39 billion, growing at a CAGR in the 15–17% range depending on the source. The variance in those estimates is wide enough to raise an eyebrow, but the direction isn’t seriously disputed. Developer tooling is where a meaningful chunk of that growth is happening.

Skillkit’s competitor field is still forming. Platforms like Toolient and Skills Hub are reportedly working on adjacent problems, organizing AI coding tools and tracking proficiency, but neither has landed with the same specificity. There’s community-level experimentation happening around Claude skill distribution, and at least one unrelated GitHub repo also called skillkit (a Python agent skills project) that makes search results fun to navigate. The naming situation is, charitably, chaotic.

What the space is still missing is the thing npm did for Node. A single, boring, reliable way to say “I want this capability, install it everywhere I work.” Nobody has built that for AI agent skills in a way that has actually stuck. Skillkit is betting they can be the first one to matter.

The Micro: 44 Agents, One CLI, and an Architecture Worth Understanding

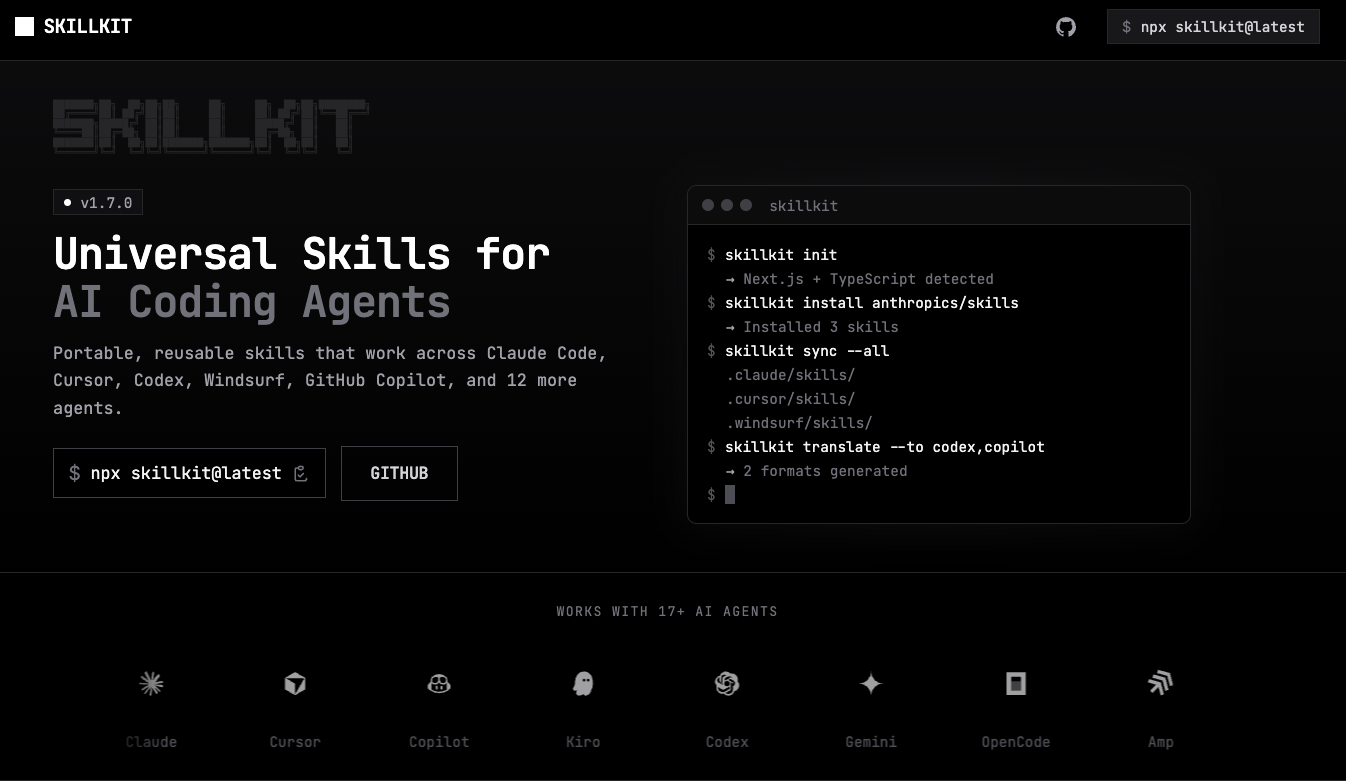

The core pitch is explicit: Skillkit is a package manager for AI agent skills. The npm comparison is intentional and right there in the branding. You run npx skillkit@latest and it handles the rest. No account required. Everything local. Zero telemetry, which they’re leading with because developers in 2025 are, correctly, suspicious of anything that phones home.

The three components are worth looking at separately.

Primer auto-generates agent instructions. Instead of hand-writing context files for every tool, you describe what you want once and Skillkit handles translation. Memory persists learnings across sessions, which addresses something that’s actually annoying: every new Claude Code session starts fresh unless you’ve done the manual work to carry context forward. Mesh handles distribution across team networks, which is where this stops being a solo-developer utility and starts being something an engineering org could standardize on.

The format translation claim is the most ambitious part. The product website lists support for 44 agent formats. An earlier LinkedIn post from someone associated with the project cited 17 agents, which suggests either fast iteration or some flexibility in what counts as “supported.” The current site lists Claude, Cursor, Copilot, Windsurf, Kiro, Codex, Gemini, Goose, Cline, Continue, and others. The count is plausible.
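To make the write-once, translate-everywhere idea concrete, here is a minimal sketch of a one-to-many adapter pattern. None of it comes from Skillkit’s codebase: the `Skill` shape, the `agent-a` and `agent-b` adapter names, and the output paths are all hypothetical, chosen only to illustrate the general technique.

```typescript
// Hypothetical sketch: none of these names, shapes, or paths come from Skillkit.

// A tool-neutral description of a skill, written once.
interface Skill {
  name: string;
  description: string;
  instructions: string[];
}

// What an adapter emits for one agent: a file path and its contents.
interface RenderedFile {
  path: string;
  contents: string;
}

// One adapter per agent format. Each entry is a separate thing to maintain.
type Adapter = (skill: Skill) => RenderedFile;

// Illustrative adapters only; real agent formats and file locations will differ.
const adapters: Record<string, Adapter> = {
  // A Markdown-style target: heading, description, bulleted instructions.
  "agent-a": (skill) => ({
    path: `agent-a/${skill.name}.md`,
    contents: [
      `# ${skill.name}`,
      "",
      skill.description,
      "",
      ...skill.instructions.map((step) => `- ${step}`),
    ].join("\n"),
  }),
  // A JSON-style target: the same information under different keys.
  "agent-b": (skill) => ({
    path: `agent-b/${skill.name}.json`,
    contents: JSON.stringify(
      { name: skill.name, description: skill.description, rules: skill.instructions },
      null,
      2,
    ),
  }),
};

// Translate one skill into every requested format, failing loudly on unknown agents.
function translate(skill: Skill, targets: string[]): RenderedFile[] {
  return targets.map((target) => {
    const adapter = adapters[target];
    if (!adapter) {
      throw new Error(`No adapter registered for agent: ${target}`);
    }
    return adapter(skill);
  });
}

const example: Skill = {
  name: "commit-style",
  description: "Write commit messages in the team's preferred style.",
  instructions: ["Use the imperative mood.", "Keep the subject under 50 characters."],
};

const files = translate(example, ["agent-a", "agent-b"]);
```

The point of the sketch is the shape of the problem rather than the specifics: every supported agent is one more entry in that adapter table, and every upstream format change means revisiting the matching entry.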

One source says the GitHub repo (rohitg00/skillkit) references 15,000+ skills available via the platform. That number appears in only one place, so I’d hold it loosely.

Skillkit got solid traction on launch day. The open source and GitHub topic tags are doing real work there. This is exactly the audience that will run npx skillkit@latest just to see what happens.

The Verdict

Skillkit is solving a real problem with a sensible approach. The fragmentation of AI coding agents is genuinely irritating, and the package manager framing is smart because developers already have a working mental model for it.

That said, the history of “universal adapter” tools is not encouraging. Plenty of projects have handled seven integrations gracefully and then buckled under the weight of maintaining forty-four moving targets. Every time Cursor ships a new rules format or Claude Code changes its memory behavior, Skillkit has to ship an update. That’s not a criticism exactly. It’s the job they signed up for. It just means the 30-day question isn’t really “does this work.” It’s “how fast do they ship fixes when something breaks downstream.”

At 60 days, Mesh is the thing worth watching. Solo developer tools are easy to abandon. Team infrastructure is stickier.

At 90 days, I’d want to know whether any engineering teams have actually standardized on this, or whether it’s still primarily individual power users. That distinction matters a lot for what kind of company this becomes.

The zero-telemetry, no-account-required positioning is genuinely good. The ambition is real. This is probably a solid fit for individual developers who are already deep in multi-agent workflows and want something that reduces the config management overhead. I’m less convinced it’s ready to be team infrastructure at any real scale, not because the idea is wrong, but because 44 integrations is a serious maintenance surface for an early-stage open source project. The concept earns real respect. The execution is still being tested.