The Macro: AI Gave You a Canvas, Then Locked It

The AI productivity tools market hit roughly $8.8 billion in 2024 and is projected to reach $36 billion by 2033, a forecast pegged at a 15.9% compound annual growth rate (CAGR). Growth at that pace reflects a lot of venture capital and a lot of optimism about what software can do for knowledge workers who hate doing things manually. Graphic design sits squarely in the middle of that story.
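A quick way to sanity-check market figures like these is to compute the growth rate the two endpoints imply. The sketch below assumes the $8.8 billion figure is the 2024 base and 2033 is the endpoint, which gives nine years of compounding. The helper names `implied_cagr` and `project` are illustrative and do not come from any published forecast.

```python
def implied_cagr(start_value: float, end_value: float, years: int) -> float:
    """Compound annual growth rate that turns start_value into end_value over `years` years."""
    return (end_value / start_value) ** (1 / years) - 1


def project(start_value: float, rate: float, years: int) -> float:
    """Value reached by compounding start_value at `rate` per year for `years` years."""
    return start_value * (1 + rate) ** years


# Assumption: $8.8B is the 2024 base and 2033 is the endpoint (nine compounding years).
base_2024 = 8.8     # billions of USD
target_2033 = 36.0  # billions of USD
years = 2033 - 2024

print(f"Implied CAGR: {implied_cagr(base_2024, target_2033, years):.1%}")
print(f"$8.8B at 15.9% for {years} years: ${project(base_2024, 0.159, years):.1f}B")
```

Under that assumption, the endpoints imply roughly 16.9% a year. Compounding $8.8 billion at 15.9% lands nearer $33 billion than $36 billion. The quoted rate therefore probably uses a different base year or forecast window than 2024 to 2033.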

The dominant narrative around AI design tools has been generation: the magic moment when a text prompt becomes a polished visual. Canva added AI features. Adobe Firefly exists. Tools like Midjourney and DALL-E produce genuinely impressive imagery. But most of them share a structural issue: the output is either a raster image you can't meaningfully edit, or it's generated into a template system where the AI's choices are more or less final.

You get what you get. Then you either use it or start over.

That's a real gap, and it's not subtle. Marketing teams, founders building pitch decks, social media managers producing weekly content: none of them want a one-shot image generator. They want something closer to a junior designer who can start the work and then let them finish it. The output needs to live inside a workflow, not outside it.

Canva's model captures enormous market share but hasn't fully solved the handoff from generation to editing. Figma operates further up the sophistication stack and is less interested in the non-designer use case. The space between them (AI-native generation that lands in a real, layered, editable canvas) is where several startups are currently placing their bets. Moda is one of them.

The Micro: What “Fully Editable” Actually Means Here

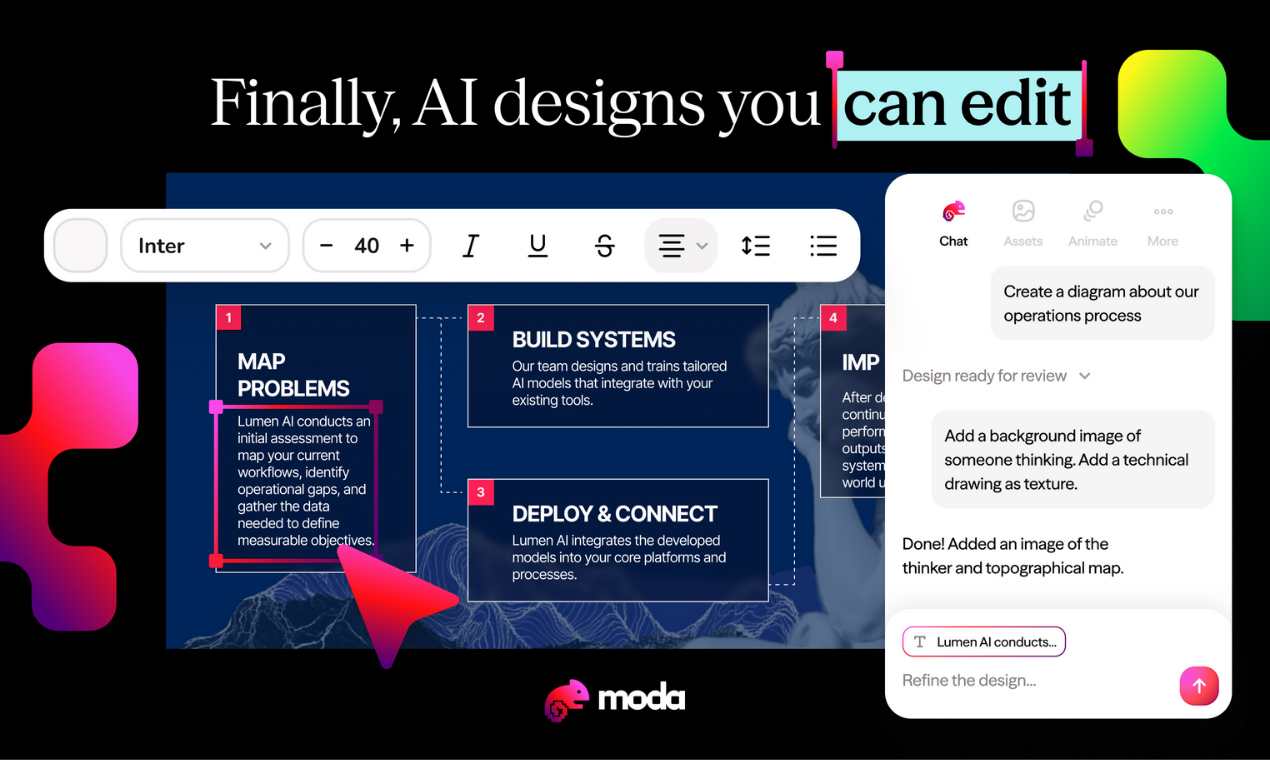

Moda's core pitch is straightforward. You describe what you need (a slide deck, a social post, a hiring poster, an event invite), and the AI generates a design that drops directly into a layered canvas you can edit like a normal design tool. Supported formats include slides, social content, PDFs, diagrams, and UI mockups. That's a wider surface area than most single-tool competitors attempt at launch.

The “layered canvas” framing is doing real work in that pitch.

It’s the direct answer to the most common complaint about AI design output: you receive a flattened, locked image instead of something you can actually modify. Whether Moda’s layer system is as fully featured as Figma’s or closer to Canva’s more constrained editor isn’t something their website resolves cleanly. But the explicit positioning around editability suggests it’s a genuine product priority rather than a marketing add-on.

The brand-alignment angle is the other notable bet. Moda claims the AI is "trained in graphic design" and produces "brand-aligned" output, which implies some mechanism for ingesting or applying brand assets (colors, fonts, logos) before generation. This is the kind of feature that separates tools built for one-off use from tools with a shot at becoming infrastructure for a marketing team. Those are very different products, even if they look similar at first glance.

Moda drew solid traction on launch day. According to the co-founder's LinkedIn, it is reportedly part of Y Combinator's Winter 2026 (W26) batch, which adds institutional context without guaranteeing anything about the actual product.

The "remix" gallery on Moda's homepage, with prebuilt designs for quarterly stats, fundraising announcements, and conference speakers, is a smart on-ramp. It demonstrates output quality without requiring a signup. That's a more honest form of product marketing than most AI tools manage.

The Verdict

Moda is solving a real problem with a specific thesis: generation without editability is a dead end for professional use cases. That’s correct. The question is execution depth.

At 30 days, the signal to watch is retention among non-designers. The YC pedigree and launch reception get them in the door with early adopters. But if the editable canvas turns out to be a simplified layer system that frustrates anyone with real design expectations, while simultaneously confusing people who’ve never opened Figma, Moda lands in an awkward middle that’s hard to market out of.

At 60 days, the brand-alignment feature becomes the real differentiator test. If a 10-person startup can genuinely onboard its brand kit and produce consistent social content without a designer in the loop, that's a retainable use case. If it requires enough manual adjustment to make the generation step feel not worth it, the pitch breaks.

I'd want to know how the AI handles brand kits in practice, what the layer system actually supports compared with what a polished demo shows, and whether output quality holds across formats or peaks in slides and falls apart in something like diagrams or UI mockups.

This is probably the right tool for a small marketing team that needs to move fast and doesn’t have a dedicated designer. I’m skeptical it works for anyone with strong design opinions or complex brand requirements. The underlying bet, that the edit button is the actual product, is right. Whether Moda built it well enough is what the next 90 days will answer.