The Macro: AI Agents Are Flying Blind and Everyone Knows It

The infrastructure for running AI agents in production is about two years behind the hype for building them. Everyone’s spawning sub-agents, wiring up memory, scheduling cron jobs, and then squinting at log files hoping nothing expensive is quietly burning in the background.

Observability as a category has been here before. Traditional software had the exact same problem. You could build a distributed system faster than you could understand what it was doing, and that’s what eventually produced Grafana, Prometheus, Datadog, and the rest of the monitoring stack backend engineers now take for granted. The open-source services market supporting all of this is substantial. Multiple research firms place it between $35 billion and $39 billion for 2024-2025, growing at roughly 15-17% annually over the next decade. The variance in those numbers is suspicious. The direction is not.

The specific gap ClawMetry is targeting is narrower and newer. OpenClaw already has its own set of alternatives (NanoClaw, ZeroClaw, and TrustClaw, per a Reddit thread from the LocalLLaMA community), which tells you the framework layer is fragmenting fast. That fragmentation is actually good news for purpose-built tooling. None of the general-purpose observability platforms are going to meaningfully care about token costs or sub-agent spawning behavior anytime soon. Grafana doesn’t know what a memory change event is. Datadog charges you by the metric.

The opening for something like ClawMetry is real. Whether this particular implementation fills it is a separate question entirely.

The Micro: One Command, Then Suddenly You Can See

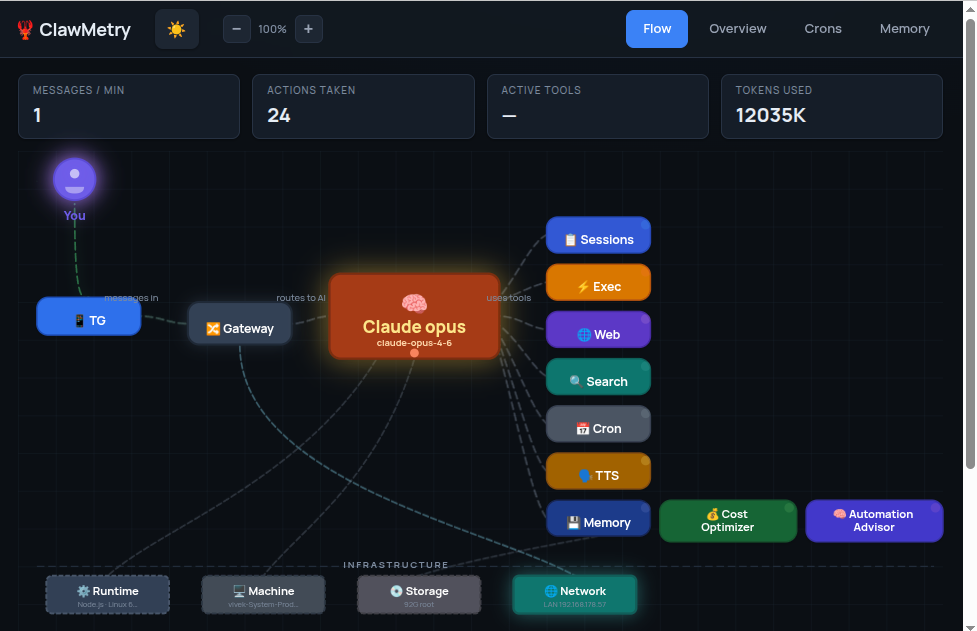

ClawMetry is simple to describe. Run pip install clawmetry, run clawmetry, and you get a real-time dashboard showing what your OpenClaw agents are actually doing. Token costs, sub-agent activity, cron job status, memory changes, session history. All live, all in one place.

The product website shows a flow visualization interface that maps agent activity visually rather than dumping it into tables. That’s a deliberate design choice, and probably the right one. Agents running in parallel are genuinely hard to reason about in a spreadsheet view.

The install story is aggressively low-friction. There’s a curl-to-bash installer, a pip package, PowerShell and CMD options for Windows, and it reportedly runs on a Raspberry Pi. Zero config is the claim. The free onboarding offer (no upsell, according to the site) suggests they’re either confident in the product or aware that adoption is still early. Possibly both. The managed instance option shows they’re thinking about the full range of users, from a solo developer running weekend experiments to a team that doesn’t want to touch infrastructure.

The GitHub repo is public under vivekchand, who, according to LinkedIn, works on production AI systems at Booking.com. That matters when you’re piping an install script directly into bash. You are reading that install command carefully, right?
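
If you'd rather read the script than trust it, one general habit is to download it to a file first instead of piping it into bash. The helper below is a minimal sketch of that habit, not anything ClawMetry ships: the function name `fetch_for_review` and the default filename `install.sh` are made up for illustration, and the URL is whatever the project's own instructions give you.

```shell
# Hypothetical helper (not part of ClawMetry): save an install script to disk
# so it can be read before it is run, instead of piping curl into bash.
fetch_for_review() {
    url="$1"                 # script URL, supplied by the caller
    dest="${2:-install.sh}"  # where to save it; the default name is an assumption

    # -f: fail on HTTP errors, -sS: quiet but still print errors,
    # -L: follow redirects, -o: write to a file rather than stdout
    curl -fsSL "$url" -o "$dest" || return 1

    echo "Saved $dest ($(wc -l < "$dest") lines). Read it before running it."
}

# Usage (placeholder URL; substitute the one from the project's instructions):
#   fetch_for_review "https://example.com/install.sh" install.sh
#   less install.sh
#   bash install.sh
```

It adds one step to the install, and that step is the whole point: nothing executes until you have seen what it does.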

It launched on Product Hunt and got solid traction on launch day. For a developer tool in a specific niche, that’s a meaningful signal. Not a viral consumer moment, just real interest from people who actually build with OpenClaw.

It’s free. It’s open source. The barrier to trying it is genuinely minimal.

The Verdict

ClawMetry is doing something that should exist, and it’s approaching it with the right instincts. Open source, zero-friction install, cross-platform, free. Those aren’t small decisions. A paid-only, cloud-only, macOS-only version of this would have been easier to build and significantly worse for everyone trying to use it.

The risk isn’t that the product is bad. The risk is that it’s tied to one framework.

OpenClaw has momentum right now, but the agent framework space is reshuffling constantly. NanoClaw and ZeroClaw already exist, more will follow, and a tool that only works for one of them is one architectural pivot away from irrelevance. The 30-day question is whether usage grows fast enough to justify expanding framework support. The 60-day question is whether the managed instance offering finds any traction, because that’s presumably how this eventually pays for itself. The 90-day question is whether the observability data being collected is good enough that someone builds something interesting on top of it.

What I’d want to know before fully endorsing it: how deep does the token cost tracking actually go, and does the memory change monitoring work reliably across session boundaries? Those are the hard parts of agent observability. The product website doesn’t get specific on either.

If you’re running OpenClaw agents and you’re not using this, you’re operating on vibes. That’s your call.